A recently published scientific paper on selecting staff provides new insights into the best way to identify top performers. Researchers led by Professor Paul Sackett of the University of Minnesota aggregated and re-analyzed the data from hundreds of prior studies on predicting job performance. Their meta-analysis incorporates results from tens of thousands of people working in, or applying for, over 500 different jobs. The size and scope of their analysis help cut through the noise of individual studies to provide reliable estimates of the predictive power, or validity, of a wide range of selection methods.

What conclusions can we draw from this new study?

In many ways, the results are consistent with the last large-scale meta-analysis on this topic, a now-classic paper published by Professors Frank Schmidt and John Hunter in 1998. Across both studies, the methods with the highest validities are those that directly assess the knowledge, skills and abilities required to do the job in a structured and consistent manner. The most predictive methods from the latest study are:

| Method | Description |
| --- | --- |
| Structured interviews | Asking a consistent set of interview questions about what candidates have done or would do in job-relevant scenarios and rating their responses according to the same criteria. |
| Job knowledge tests | Testing of the knowledge / information that job holders need to know to do the job. |
| Empirically keyed biodata | Asking a standardized set of biographical questions that are statistically scored to maximize the prediction of performance. |
| Work sample tests | Asking candidates to perform part of the job and scoring their performance in a standardized way. |
| Cognitive ability tests | Testing candidates’ ability to acquire and use information to solve problems in a standardized way. |

What’s changed according to this study?

Structured interviews more predictive and less biased than unstructured interviews

The gap between the validities of structured interviews and unstructured interviews is larger than previously assessed. According to the new paper, structured interviews have more than double the predictive power of unstructured interviews. The results also show that structured interviews have nearly a third less bias than unstructured interviews. These findings amplify previous calls to use a structured format in job interviews. This includes asking candidates the same questions, rating answers according to a guide, and ensuring all questions are directly related to the job.

Emotional Intelligence assessments included

The new study includes tests of emotional intelligence (EI) in its rankings, reflecting increased interest in EI testing over the past 20 years. While not in the top five most predictive methods, the results show that EI tests are meaningfully related to job performance. This is especially true in jobs that involve high levels of emotional labor. These kinds of jobs require employees to display particular emotions (e.g., happiness) and also to process or respond appropriately to the emotions of others. Two examples are customer service positions and health care roles.

Personality traits included and importance of work-related content emphasized

Personality assessments have been examined in detail in the new study, with estimates of validity for each of the “big five” personality traits. As with previous studies, conscientiousness has the highest validity as a predictor of job performance. Conscientiousness is the extent to which a person is orderly, diligent, and self-disciplined in their behavior. The results also show that personality assessments are more predictive of job performance when candidates are asked to describe their behavior at work, rather than “in general” or with no contextual guidance. Phrasing questions about work boosts the validity of personality assessments by about a third. This result demonstrates the importance of using personality assessments that are specifically designed for work.

Race and assessment methods examined

The study also highlights the relationships between assessment method scores and race. In the United States, from which the current study drew almost all of its data, White candidates routinely get higher scores in selection processes compared to Black or African American candidates. The gap is largest for cognitive ability tests, work samples, and job knowledge tests. Personality assessments had the least adverse impact against Black or African American candidates. This new study gives a high level of prominence to these findings, reflecting the importance of reducing adverse impact in selection. The findings support using multiple methods when selecting staff to maximize predictive validity while minimizing adverse impact.

Predictive validity re-examined

Finally, it turns out that our best methods of predicting job performance are probably not as good as we thought they were. Looking just at the top five methods shown above, the new paper finds validities that are about 25% lower than those reported in previous research. I go into the reasons for this in more detail below. But briefly, it looks like the previous estimates of validity were erroneously high. The true validities of the selection methods have not changed over time, but we are now estimating validity more accurately, and more conservatively, than before.

Where do these estimates of validity come from?

Industrial and Organizational (IO) Psychologists are constantly conducting research studies on employee selection methods. Additionally, organizations sometimes make their archival data available to researchers for analysis. Researchers then compare the scores people get on one or more selection methods with ratings of their actual job performance.

The degree to which scores on a selection method predict job performance is expressed as a correlation. A correlation of 0.00 means that there is no relationship at all between the scores candidates get on the selection method and their subsequent job performance. A correlation of 1.00 means that candidate scores perfectly predict their subsequent performance levels. Most selection methods have correlations with performance between 0.20 and 0.50. A long way short of perfect, but still useful.
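To make the idea of a validity coefficient concrete, here is a minimal sketch of how a Pearson correlation between test scores and performance ratings can be computed. The eight candidates and their numbers are made up purely for illustration and do not come from the study.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products and sums of squares around the means.
    cross = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cross / math.sqrt(ss_x * ss_y)


# Hypothetical data: selection test scores (out of 100) and later
# job performance ratings (out of 10) for eight hires.
test_scores = [45, 52, 58, 63, 70, 74, 81, 90]
performance = [5, 4, 6, 5, 7, 6, 8, 7]

validity = pearson(test_scores, performance)

# If ratings were an exact straight-line function of the scores,
# the correlation would be 1.00.
perfect = pearson(test_scores, [2 * s + 1 for s in test_scores])

print(f"validity of the hypothetical test: {validity:.2f}")
print(f"perfectly predictive test:         {perfect:.2f}")
```

Real validity studies use far larger samples than this; with only a handful of people, the coefficient can swing widely from one sample to the next.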

Over the years, IO Psychologists have conducted hundreds of these studies. Around the 1980s scientists started to combine the results of these studies to make general conclusions about which selection methods were the best overall. These combined studies are called meta-analyses. The new paper produced by Professor Sackett and his colleagues is the latest in a line of meta-analyses. It uses the most comprehensive set of prior studies available, and also makes advances in the statistical methods used to draw conclusions from these aggregated studies.
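The article does not describe how the individual studies were weighted, so the following is only a sketch of one common starting point: averaging the correlations with each study weighted by its sample size, so that large studies count for more than small ones. The three studies listed are hypothetical.

```python
def weighted_mean_validity(studies):
    """Sample-size-weighted mean correlation.

    `studies` is a list of (sample_size, correlation) pairs.
    """
    total_n = sum(n for n, _ in studies)
    return sum(n * r for n, r in studies) / total_n


# Hypothetical validity studies: (number of people, observed correlation).
studies = [(60, 0.41), (250, 0.22), (1200, 0.30)]

pooled = weighted_mean_validity(studies)
unweighted = sum(r for _, r in studies) / len(studies)

print(f"sample-size-weighted mean: {pooled:.3f}")
print(f"simple unweighted mean:    {unweighted:.3f}")
```

A full meta-analysis goes further than this, for example by estimating how much the results vary across studies and by applying statistical corrections.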

Why have estimates of validity decreased in the new study?

As noted above, the validity estimates in the new study are lower than those found in previous research. The table below shows the validity estimates from the last large-scale meta-analysis, published in 1998, along with the estimates from the current meta-analysis.

| Selection Method | Previous validity estimate (1998) | New validity estimate (2021) |
| --- | --- | --- |
| Work sample testing | 0.54 | 0.33 |
| Empirically keyed biodata | Not Assessed | 0.38 |
| Cognitive ability testing | 0.51 | 0.31 |
| Structured job interview | 0.51 | 0.42 |
| Job knowledge testing | 0.48 | 0.40 |

The new validity estimates are lower in each case where we have comparable data. The reasons for this decrease are varied, but the biggest overall contributor is that prior studies were too liberal in the way that they corrected for an issue known as range restriction.

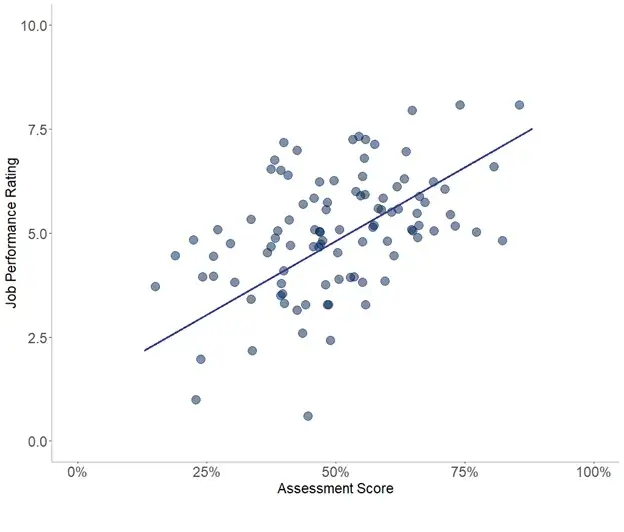

To explore this in more detail, consider the scatterplot below. This plot shows 100 people, with their scores on a selection test (out of 100) plotted on the X axis, and their job performance ratings (out of 10) on the Y axis. As can be seen, higher test scores tend to be associated with better performance ratings. The correlation depicted here is 0.45, indicating a very high level of predictive power.

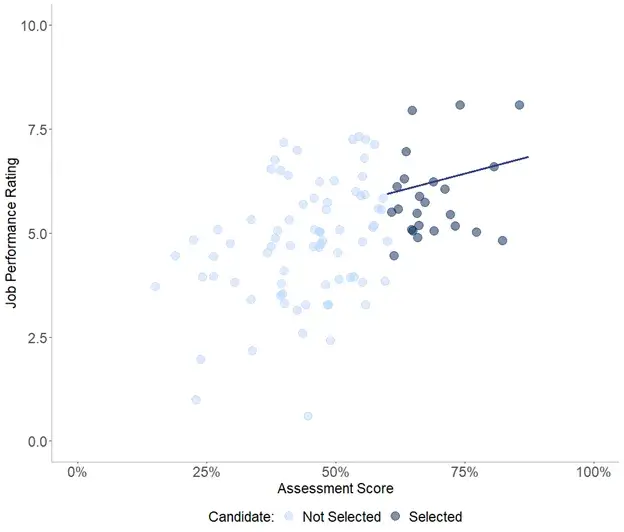

When you’re using a selection test, however, you usually don’t see the job performance of the people who scored poorly on the test because you don’t employ them to do the job. If you set your test cut-off at 60%, for example, then you only see the job performance of the people who have scored 60% or better. The scatterplot below shows this situation, with the people whose job performance you observe shown in dark blue, and the people whose job performance you don’t observe shown in light blue. The line of best fit shown here is plotted only for the dark blue points.

When you’re just looking at the people who “passed” the selection test, the relationship between test scores and job performance ratings doesn’t look that great. In this figure, the correlation between job performance and test scores for the dark blue points is 0.25. This is actually a useful level of validity, but nowhere near as strong as the “true” correlation of 0.45. In effect, the correlation you observe underestimates the validity of the test because it does not reflect how many poor performers you avoid. This issue is called range restriction, because it’s caused by a failure to observe the job performance for the full range of test scores.
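The effect is easy to reproduce in a small simulation. The sketch below is not the data behind the figures. It assumes standardized, normally distributed test scores, a true correlation of 0.45, a cut-off placed at the average score, and 20,000 simulated applicants (rather than 100) to keep sampling noise small.

```python
import math
import random


def corr(pairs):
    """Pearson correlation for a list of (score, rating) pairs."""
    n = len(pairs)
    mean_s = sum(s for s, _ in pairs) / n
    mean_p = sum(p for _, p in pairs) / n
    cross = sum((s - mean_s) * (p - mean_p) for s, p in pairs)
    ss_s = sum((s - mean_s) ** 2 for s, _ in pairs)
    ss_p = sum((p - mean_p) ** 2 for _, p in pairs)
    return cross / math.sqrt(ss_s * ss_p)


def simulate(true_r=0.45, n=20_000, cutoff=0.0, seed=1):
    """Return (correlation for all applicants, correlation for hires only)."""
    rng = random.Random(seed)
    applicants = []
    for _ in range(n):
        score = rng.gauss(0, 1)
        noise = rng.gauss(0, 1)
        # Performance shares a correlation of `true_r` with the test score.
        rating = true_r * score + math.sqrt(1 - true_r ** 2) * noise
        applicants.append((score, rating))
    # Only people at or above the cut-off are hired and later rated.
    hired = [(s, p) for s, p in applicants if s >= cutoff]
    return corr(applicants), corr(hired)


full_r, hired_r = simulate()
print(f"all applicants: r = {full_r:.2f}")
print(f"hired only:     r = {hired_r:.2f}")
```

Under these assumptions the hired-only correlation comes out clearly lower than the correlation for all applicants, even though the test itself has not changed.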

Fortunately, not all studies investigating the validity of selection methods are significantly affected by range restriction. In some studies, all incumbents are assessed on the test, and so job performance scores are available for everyone, regardless of how well they scored on the test. In other studies, there is some range restriction, but it’s not nearly as severe as the example shown above.

When looking at all the studies, you can see that the more the range is restricted, the lower the correlation between assessment scores and job performance becomes. We can describe this relationship mathematically in a formula that links the degree of range restriction to the observed correlation. When researchers conduct a validity study, they mathematically adjust the observed correlation to reflect what it would have been if there were no range restriction. This adjustment allows us to get a better estimate of the true relationship between assessment scores and job performance.
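One widely taught version of such an adjustment is the correction for direct range restriction, often called Thorndike's Case II. The article does not say which correction the underlying studies applied, so treat the sketch below as an illustration under that assumption. Here `sd_ratio` is the standard deviation of test scores among all applicants divided by the standard deviation among the people who were hired, and the value 2.0 is hypothetical.

```python
import math


def correct_for_range_restriction(observed_r, sd_ratio):
    """Estimate the unrestricted correlation from a restricted one.

    corrected = r * U / sqrt(1 + r^2 * (U^2 - 1)), where U = sd_ratio.
    A ratio of 1.0 means no range restriction and leaves r unchanged.
    """
    if sd_ratio <= 0:
        raise ValueError("sd_ratio must be positive")
    return observed_r * sd_ratio / math.sqrt(
        1 + observed_r ** 2 * (sd_ratio ** 2 - 1)
    )


# Observed validity among hires (0.25, as in the example above) and a
# hypothetical ratio: applicant scores vary twice as much as hires' scores.
corrected = correct_for_range_restriction(0.25, 2.0)
print(f"corrected validity: {corrected:.2f}")
```

With these inputs the corrected value is roughly 0.46, close to the 0.45 used in the scatterplot example. The size of the adjustment depends entirely on the ratio that is assumed.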

The bulk of the new paper by Professor Sackett and his colleagues is actually a critique of the methods that previous studies have used to adjust for range restriction. Professor Sackett and his colleagues argue that previous researchers over-estimated how much range restriction there was in the studies they examined. Because the degree of range restriction was over-estimated, the adjustments that were applied to the observed correlations were too large. This inflated the estimates of selection method validity. The new paper uses more conservative estimates of range restriction and as a result has more conservative estimates of validity. In many instances, the authors argue, it is actually best to assume that the degree of range restriction was zero, and not correct the observed correlations at all.
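The sensitivity of the corrected estimate to the assumed degree of range restriction can be sketched with the same textbook correction for direct range restriction. This is an assumption on my part: the paper's own procedures are not described here. The observed correlation of 0.25 is taken from the example above, and the assumed ratios are hypothetical.

```python
import math


def corrected_r(observed_r, assumed_sd_ratio):
    """Direct range restriction correction (Thorndike's Case II)."""
    return observed_r * assumed_sd_ratio / math.sqrt(
        1 + observed_r ** 2 * (assumed_sd_ratio ** 2 - 1)
    )


observed_r = 0.25
# Ratio of applicant SD to hired-group SD that a researcher might assume.
# 1.0 means "assume no range restriction", i.e. no correction at all.
assumed_ratios = [1.0, 1.25, 1.5, 2.0]

estimates = {ratio: corrected_r(observed_r, ratio) for ratio in assumed_ratios}
for ratio, estimate in estimates.items():
    print(f"assumed SD ratio {ratio:.2f} -> corrected validity {estimate:.2f}")
```

The same observed correlation yields a larger corrected validity the more restriction is assumed. If the assumed ratio is higher than the real one, the corrected estimate overshoots.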