What are the Best Practices for Implementing Pre-Employment Testing?

Once an employer decides to integrate pre-employment testing into the hiring process, how can he or she get the most out of it? The following best practices and tactical strategies will allow employers to streamline the recruiting process and make smarter hiring decisions with greater efficiency. We will address such frequently asked questions as:

- How should employers choose which pre-employment tests to administer?

- When should tests be used?

- How much pre-employment testing is appropriate?

- What scores should employers expect from their applicants?

How Do You Select the Right Pre-Employment Test for Your Organization?

Test selection is the first and probably most important step in implementing a pre-employment testing program, because organizations must use tests that measure job-related abilities and skills. The key to ensuring that any selection procedure is valid and effective is the so-called rule of "job-relatedness," and tests are no exception: pre-hire tests must measure skills, abilities, and traits that are relevant to the job in question. Choosing the wrong tests produces an ineffective selection method and can also leave a testing program out of legal compliance. This is why a common best practice in employment testing is to conduct a Job Requirements Analysis for a position before using tests to screen candidates. Once a company has created a job profile describing the skills, work activities, and abilities associated with a given position, it is much easier to determine which tests will be the most relevant.

Tests that measure general capabilities, such as critical thinking, problem-solving, and learning ability, are likely to be "job-relevant" for many different positions because they assess traits that are valuable in nearly every type of job. Tests designed for specific positions, such as a sales personality test, should never be used for positions they were not designed for.

Finally, since most organizations hire for a variety of positions with widely varying job requirements, it is generally advisable to choose a separate testing protocol for each position. The test batteries for many positions will often share common elements (for example, a general aptitude test may be appropriate for many different positions, as explained above), but in general, the best way to ensure adherence to the rule of job-relatedness is to make test selection decisions on a position-by-position basis.

How Do You Integrate Pre-Employment Testing into Your Recruitment Process?

Once an organization has chosen which tests to use for a given position, it must decide at what stage of the hiring process to test applicants. Many factors can go into this decision, but it is generally recommended to test applicants as early as possible. Testing early in the hiring process is an efficient and reliable way to gather objective data on candidates before deciding which of them should move on to the next step. Requiring applicants to take tests through a link included in a job board posting, for example, helps employers filter large applicant pools and ensures that everyone moving through the hiring funnel meets the basic standards for the job. This streamlines the hiring process and saves a great deal of time that would otherwise be spent reading resumes from unqualified applicants.

Administering tests at the beginning of the hiring process often means that candidates take these pre-employment assessments remotely, and many companies have initial reservations about remote testing. How can an organization be sure that the candidate is the one actually taking the tests? With unproctored testing, it is difficult to be sure someone isn't getting outside help. That's why Criteria Corp recommends that, for aptitude and skills tests, employers confirm the offsite results by administering different versions of the tests in person to applicants who passed the initial screening. To get the most out of onsite testing, one useful strategy is to tell candidates ahead of time that they will be retested onsite; that way, they know they will be wasting their time if they don't take the remote tests honestly.

In general, remote testing is increasingly becoming the norm because the benefits of upfront testing far outweigh the drawbacks. One reason is that cheating may be less common than expected. Criteria Corp conducted a study with one of its largest customers, which administers aptitude tests remotely at the front end of its hiring process and later retests a select number of candidates onsite. Comparing candidates' remote scores with their onsite scores showed that the share of people who had not taken the test honestly offsite (i.e., who had outside help) was quite small, well under 2% of the applicant pool. This may be because the company explicitly describes its retesting policy when it sends candidates the invitation to take the test. Being explicit about retesting removes the incentive to cheat, because applicants will only be wasting their own time if they take the test dishonestly.
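The text does not say how the study compared remote and onsite scores. As a minimal sketch of one plausible approach, assuming each retested candidate has a remote score and an onsite score on the same scale, one could flag anyone whose remote score exceeds the onsite score by more than a tolerance meant to absorb ordinary retest variation. The `RetestRecord` type, the function names, the 8-point tolerance, and the sample records are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RetestRecord:
    candidate_id: str
    remote_score: float  # unproctored score from the initial screen
    onsite_score: float  # proctored score on a different version of the test


def flag_suspect_results(records: List[RetestRecord], tolerance: float) -> List[str]:
    """IDs whose remote score beats the onsite score by more than `tolerance` points."""
    return [r.candidate_id for r in records if r.remote_score - r.onsite_score > tolerance]


def suspect_rate(records: List[RetestRecord], tolerance: float) -> float:
    """Share of *retested* candidates flagged (not a share of the whole applicant pool)."""
    if not records:
        return 0.0
    return len(flag_suspect_results(records, tolerance)) / len(records)


# Hypothetical retest data for four candidates
records = [
    RetestRecord("A", remote_score=31, onsite_score=30),
    RetestRecord("B", remote_score=28, onsite_score=29),
    RetestRecord("C", remote_score=40, onsite_score=22),
    RetestRecord("D", remote_score=25, onsite_score=24),
]
print(flag_suspect_results(records, tolerance=8))  # ['C']
print(suspect_rate(records, tolerance=8))  # 0.25
```

The toy data deliberately includes one obvious case and says nothing about real cheating rates; a real comparison would also need to account for differences between test versions.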

A second reason some companies hesitate to use tests early in their hiring process is cost. When companies link to a test from a job posting, they will likely get a huge number of applicants taking the tests. If a testing service requires companies to pay per test, costs will mount quickly. Therefore, if an organization does decide to administer tests remotely, it is best to choose a provider that has a flat, subscription-based pricing model.
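The cost argument is simple arithmetic. The sketch below compares per-test billing with a flat subscription; the $20 per-test price, the $10,000 annual subscription, and the 3,000-test volume are hypothetical figures chosen only to show how quickly per-test costs can overtake a flat fee.

```python
import math


def per_test_cost(num_tests: int, price_per_test: float) -> float:
    """Total spend when every administered test is billed individually."""
    return num_tests * price_per_test


def break_even_volume(annual_subscription: float, price_per_test: float) -> int:
    """Smallest annual test volume at which per-test billing costs at least as much as the flat fee."""
    return math.ceil(annual_subscription / price_per_test)


# Hypothetical figures for illustration only; not actual vendor pricing.
PRICE_PER_TEST = 20.0
ANNUAL_SUBSCRIPTION = 10_000.0
tests_from_job_posting = 3_000

print(per_test_cost(tests_from_job_posting, PRICE_PER_TEST))
print(break_even_volume(ANNUAL_SUBSCRIPTION, PRICE_PER_TEST))
```

With these assumed numbers, per-test billing reaches the flat fee at 500 tests a year, and 3,000 tests triggered by a job-posting link would cost $60,000 against the $10,000 subscription.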

How Much Testing is Appropriate?

After selecting the relevant tests to administer and deciding when to administer them in the hiring process, the next step is to decide how many tests to administer. To attract the best talent, it’s important to provide a positive candidate experience, and when it comes to assessments, this often means keeping the tests as short and engaging as possible.

At any step of the recruitment process, it’s natural for some candidates to drop off. The goal is to minimize drop-off while retaining the candidates who are most qualified and most interested in the role. To achieve this, organizations can calibrate the amount of testing they administer so that it maximizes the talent signal while minimizing candidate drop-off.

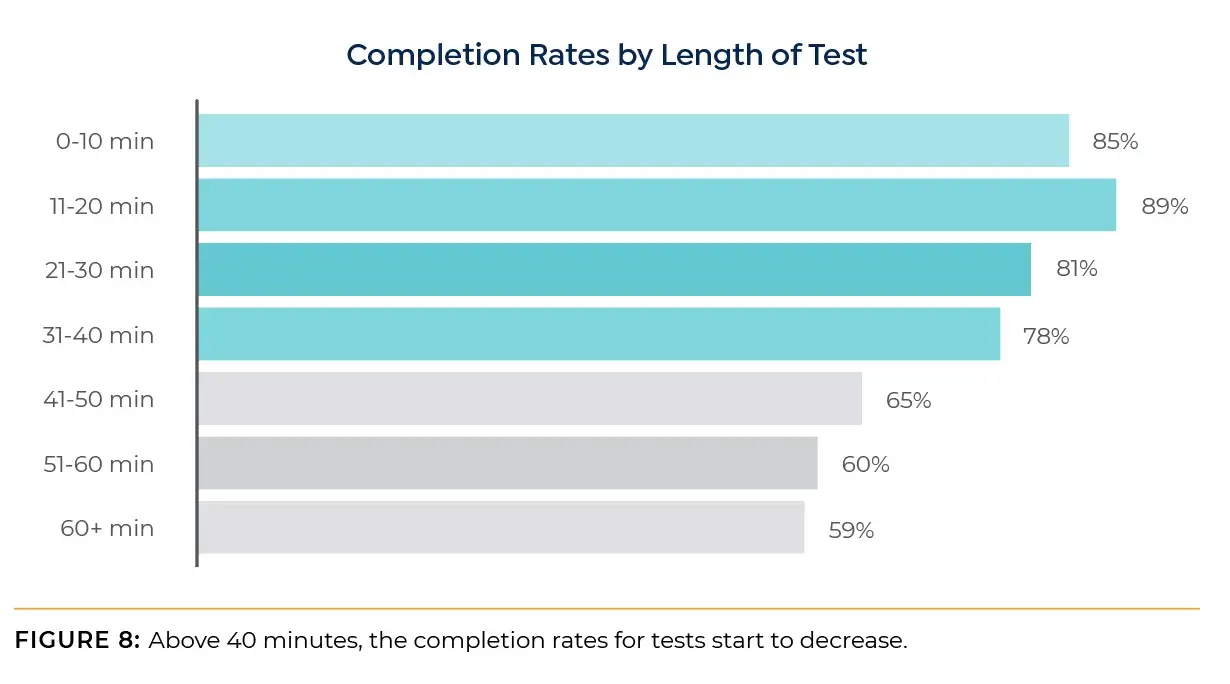

Criteria analyzed a large volume of data (about half a million tests) to help answer this question. We found that organizations can expect a completion rate of around 80% for assessments of up to 40 minutes (Figure 8).

After the 40-minute mark, the completion rate begins to decline, falling to around 60% once the total length of testing exceeds one hour. Therefore, a general best practice is to keep the total length of testing to 40 minutes or less.

While it’s important to minimize candidate drop-off, drop-off isn’t always a bad thing: candidates may self-select out of a job they are not invested in. Average drop-off may also differ depending on where testing is placed in the hiring funnel; it will likely be higher earlier in the funnel, when candidates are less invested. Many organizations that want to administer more than 40 minutes of assessments do so by dividing the tests among different stages of the funnel, administering certain tests earlier and others later, once candidates are more invested in the process.
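Splitting a battery across funnel stages can be sketched as a simple packing step. Assuming the tests are listed in the order they should be given and each has a known duration, the function below starts a new stage whenever the next test would push the current stage past the 40-minute guideline. The function name, test names, and durations are hypothetical.

```python
from typing import List, Tuple

STAGE_BUDGET_MINUTES = 40  # the 40-minute guideline from the completion-rate data


def split_into_stages(
    tests: List[Tuple[str, int]], budget: int = STAGE_BUDGET_MINUTES
) -> List[List[str]]:
    """Assign tests, in priority order, to consecutive funnel stages of at most `budget` minutes."""
    stages: List[List[str]] = []
    current: List[str] = []
    used = 0
    for name, minutes in tests:
        if minutes > budget:
            raise ValueError(f"{name} alone exceeds the {budget}-minute budget")
        if used + minutes > budget:
            # Close the current stage and start a new, later one.
            stages.append(current)
            current, used = [], 0
        current.append(name)
        used += minutes
    if current:
        stages.append(current)
    return stages


# Hypothetical battery: (test name, minutes)
battery = [("aptitude", 20), ("personality", 15), ("skills", 25), ("situational", 10)]
print(split_into_stages(battery))
```

With the sample battery, this yields two 35-minute stages: aptitude and personality early in the funnel, skills and situational later. Keeping the input order means the tests judged most important are given first, when the applicant pool is largest.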

Testing Existing Employees

When implementing a pre-employment testing program, how does a company determine the appropriate scores to look for in the ideal employee? Most testing providers can supply suggested score ranges for the most commonly tested positions, based on large data samples they have gathered and analyzed. This can provide valuable context, especially in the earliest stages of implementing pre-employment testing at an organization.

If possible, companies should consider administering the tests to existing employees in similar positions and using their scores to create benchmarks for applicants, thereby tailoring target scores to the organization's current standards. This benchmarking approach is unlikely to help small companies, where the number of incumbents in a given position is too small to yield statistically meaningful results. For example, benchmarking will not be meaningful if a company has only four customer service representatives; in that case, it may be more efficient to rely on the data and insights from the testing provider.

Whenever practical, however, administering tests to existing employees for benchmarking purposes can yield valuable insights. For example, imagine a company is hiring medical assistants for a hospital. Medical assistants are responsible for performing basic administrative duties as well as interacting with patients and other medical staff. The company selects a basic skills test to assess verbal skills, math skills, and attention to detail, along with a personality test to determine whether candidates would work well with patients. To determine the scores it should look for in its applicants, the company administers both tests to the medical assistants currently working in the hospital. The company's testing provider can then help interpret the data and set appropriate suggested score ranges.
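A rough benchmark can be sketched from incumbent scores. Assuming no provider methodology is at hand, the function below summarizes incumbents' scores as the 25th percentile, median, and 75th percentile using Python's `statistics.quantiles`, and refuses to produce a benchmark when too few incumbents were tested. The function name, the 20-person minimum, and the sample scores are hypothetical; the text names no threshold, and a provider's score-setting method may differ.

```python
import statistics
from typing import Sequence, Tuple

# Hypothetical minimum sample size; the text gives no specific number.
MIN_INCUMBENTS = 20


def benchmark_range(
    scores: Sequence[float], min_n: int = MIN_INCUMBENTS
) -> Tuple[float, float, float]:
    """Summarize incumbent scores as (25th percentile, median, 75th percentile)."""
    if len(scores) < min_n:
        raise ValueError(
            f"only {len(scores)} incumbents tested; rely on the provider's norms instead"
        )
    q1, median, q3 = statistics.quantiles(scores, n=4)
    return q1, median, q3


try:
    benchmark_range([24, 27, 31, 19])  # e.g. only four customer service reps
except ValueError as err:
    print(err)

# Hypothetical scores from 20 incumbents
incumbent_scores = [18, 21, 22, 24, 25, 25, 26, 27, 28, 28,
                    29, 30, 30, 31, 32, 33, 34, 35, 36, 38]
print(benchmark_range(incumbent_scores))
```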

One common misconception about "benchmarking" is that companies should administer tests only to their best performers and then replicate their success by hiring people with similar profiles. There are two potential problems with this. First, if only the star performers are tested, employers can't be sure that their scores actually differ from the scores the rest of the workforce would receive; that is, they can't be sure that there is any correlation between test scores and job performance in their population. Second, if companies insist on minimum scores that exactly match those of their top performers, they risk being too restrictive with their applicant pool. To avoid these problems, it is advisable to test a wide sample of incumbent employees when benchmarking.

Another benefit of testing a sample of incumbents is that doing so amounts to a "local validity" study, which can confirm and quantify the correlation between test results and job performance. Again, the testing provider should be able to advise on how best to do this. The goal is to demonstrate that the test is a valid predictor of performance for that particular organization and for the specific position for which it is being used. When performing local validity studies, it is especially important to examine the data as a whole and to do so in a statistically rigorous way.

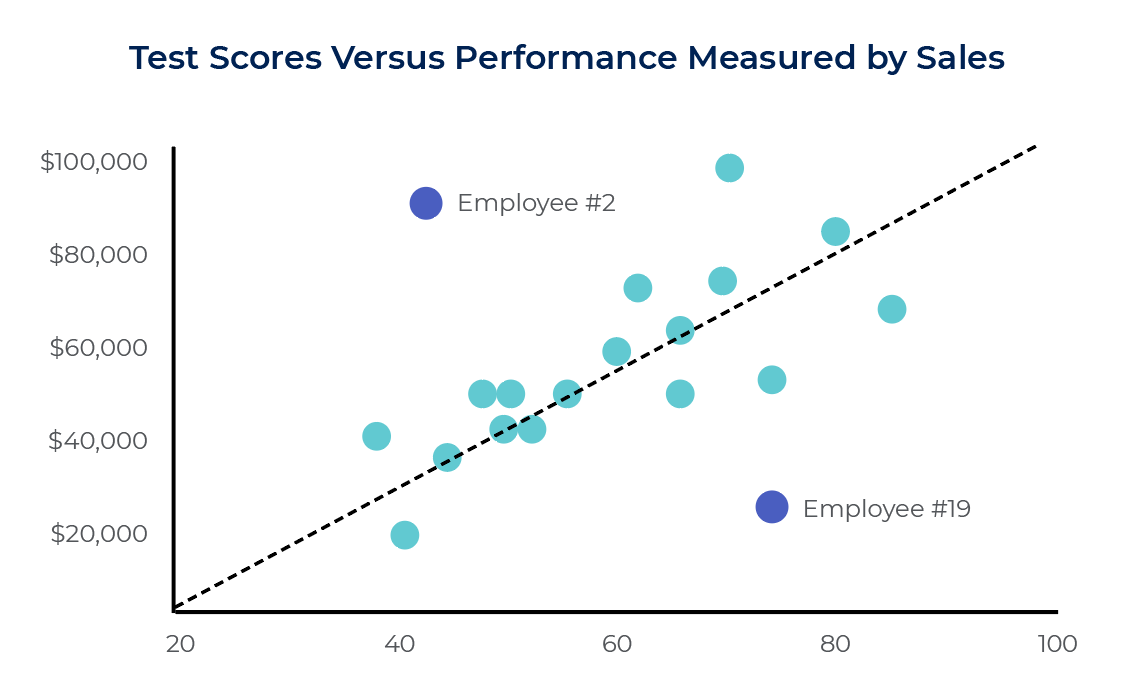

Occasionally, testing existing employees leads to unanticipated results. For example, a company might test its employees and find that one of its top performers failed the exam. This is not cause for alarm. Top-performing employees do occasionally fail tests, which is understandable given that no single test is a perfect predictor of performance. In most cases, however, these employees are outliers relative to the entire data set. It is important to evaluate the predictive accuracy of selection tools by analyzing the whole data set to see how well the test predicted performance across the sample population, and in most cases the overall correlation between test score and job performance is strong. Calculating the correlation coefficient is a great way to combat "the curse of the anecdote": letting one prominent data point obscure the trend that tells the real story of the data set.

Figure 7: There is a clear trend in the data where higher scores on the test correlate with higher sales figures. However, Employee #2 and Employee #19 are clear outliers. Employee #2 performed poorly on the test, but very well on the job. Conversely, Employee #19 performed well on the test, but poorly on the job.
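The correlation coefficient mentioned above is most commonly Pearson's r. A minimal sketch follows, using a hypothetical sample (not the data behind Figure 7): ten employees on a clear upward trend plus two outliers in the spirit of Employees #2 and #19. Computing r over the whole data set shows the positive trend survives even though the outliers pull the coefficient down. The function name and data are illustrative assumptions.

```python
import math
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least two points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical incumbents: test score and annual sales (in $1,000s).
# The last two entries are outliers: low score/high sales and high score/low sales.
scores = [10, 12, 14, 16, 18, 20, 22, 24, 26, 28, 12, 27]
sales = [80, 86, 92, 98, 104, 110, 116, 122, 128, 134, 130, 82]

print(f"r across all employees: {pearson_r(scores, sales):.2f}")
```

On Python 3.10 and later, `statistics.correlation(scores, sales)` computes the same Pearson coefficient.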

Establish Minimum (or Maximum) Cutoff Scores

Administering tests to current employees allows employers to set minimum or maximum cutoff scores to filter out unqualified applicants. Establishing a hard cutoff is particularly effective for companies with large applicant pools: in these cases, employers can afford to be selective, and cutoffs can be a huge time saver.

When determining where to set a minimum cutoff score, companies should use the information gathered while testing current employees. Many factors go into setting appropriate cutoff scores, and the testing provider should be able to help with this process. But it is important to understand that there is no "magic number" above which every candidate will be a good fit and below which every candidate is incapable of doing the job. However an organization chooses to set its cutoff scores, they do not work that way.

For example, imagine an employer is using a cognitive aptitude test to hire salespeople. The higher the cutoff is set, the more likely it is that people above it will have the critical thinking and problem-solving skills necessary to perform well in the job. If the employer's only concern were maximizing the hiring accuracy rate, a high cutoff score would make sense. However, setting the cutoff too high is inadvisable because it will filter out many capable, qualified applicants. For hiring managers and recruiters who hire large numbers of people, this would be frustrating and counterproductive. In a sense, setting cutoff scores is part art and part science. To determine where the cutoff should be, a company needs to take into account the specific dynamics of its own hiring process, such as the size of its applicant pools, the applicant-to-hire ratio, and other factors. The takeaway is simple: a minimum cutoff score helps minimize the risk of bad hires, and the higher the cutoff, the lower that risk, at the cost of screening out more qualified applicants.
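The cutoff trade-off can be made concrete with a small sketch. Assuming the incumbent sample stands in for the applicant pool, and that each incumbent is labeled simply as having performed well or not, the function below reports, for a given minimum cutoff, the share of people who would pass, the share of passers who performed well, and the share of good performers the cutoff would screen out. The function name and data are hypothetical.

```python
import math
from typing import List, Tuple

# Hypothetical incumbent sample: (test_score, performed_well_on_the_job)
incumbents: List[Tuple[float, bool]] = [
    (15, False), (18, False), (20, True), (22, False), (24, True),
    (26, True), (28, False), (30, True), (32, True), (35, True),
]


def cutoff_tradeoff(records: List[Tuple[float, bool]], cutoff: float) -> Tuple[float, float, float]:
    """Return (pass_rate, success_rate_among_passers, share_of_good_performers_screened_out)."""
    if not records:
        raise ValueError("need at least one record")
    passed = [good for score, good in records if score >= cutoff]
    good_total = sum(good for _, good in records)
    good_screened_out = sum(good for score, good in records if score < cutoff)
    pass_rate = len(passed) / len(records)
    success_rate = sum(passed) / len(passed) if passed else math.nan
    screened_out = good_screened_out / good_total if good_total else math.nan
    return pass_rate, success_rate, screened_out


for cutoff in (20, 25, 30):
    p, s, m = cutoff_tradeoff(incumbents, cutoff)
    print(f"cutoff {cutoff}: pass {p:.0%}, successful {s:.0%}, good performers screened out {m:.0%}")
```

With this sample, raising the cutoff from 20 to 30 lifts the success rate among passers from 75% to 100%, but the pass rate falls from 80% to 30% and half of the good performers are screened out.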

Minimum scores serve a clear function in the testing process, but what about maximum scores? Using maximum cutoff scores is generally NOT recommended; that is, it is not advisable to exclude someone because he or she scored too high on an aptitude test, for example. The research does not yet clearly establish any benefit to setting maximum cutoff scores.

Why would anyone consider excluding someone for being too smart? The theory goes like this. Some testing companies believe that scoring above the expected range on an aptitude test can indicate that a person will be bored by a particular job and want to move on, and that higher-aptitude people will have more opportunities to find other jobs than lower-aptitude employees. Essentially, the idea is that whereas low scores signal a risk of involuntary turnover (because those candidates may not be trainable or able to perform well), extremely high scores can signal a risk of voluntary turnover. But the evidence that overqualified employees represent a greater flight risk is not very strong, and in fact one study refutes it convincingly. Therefore, unless a company has a specific reason for doing so, it is generally not recommended to use maximum cutoff scores.